Looking for how to write MapReduce? Here you can find questions and answers on the topic.

How to write a MapReduce framework in Python. Implementing the MapReduce class: first, what we will do is write a MapReduce class that will play the role of an interface to be implemented by the user. The ‘settings’ module: this module contains the default settings and utility functions to generate the path names for the input, output and temporary files. Word count example. ...
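The idea of a MapReduce class acting as an interface can be sketched as follows. This is a minimal in-memory illustration, not the framework the summary above refers to: the method names `mapper`, `reducer` and `run`, and the `WordCount` subclass, are assumptions made for this example, and the settings module with its input, output and temporary file paths is left out entirely.

```python
from abc import ABC, abstractmethod
from collections import defaultdict


class MapReduce(ABC):
    """Hypothetical interface: the user overrides mapper and reducer."""

    @abstractmethod
    def mapper(self, key, value):
        """Yield (key, value) pairs for one input record."""

    @abstractmethod
    def reducer(self, key, values):
        """Return the combined result for all values of one key."""

    def run(self, records):
        # Map every record and group the emitted values by key (in memory).
        grouped = defaultdict(list)
        for key, value in records:
            for out_key, out_value in self.mapper(key, value):
                grouped[out_key].append(out_value)
        # Reduce each group; sorted keys give a deterministic order.
        return {key: self.reducer(key, grouped[key]) for key in sorted(grouped)}


class WordCount(MapReduce):
    """Example user implementation (assumed for illustration)."""

    def mapper(self, key, value):
        # key is a line number (unused), value is the line of text.
        for word in value.lower().split():
            yield word, 1

    def reducer(self, key, values):
        return sum(values)


if __name__ == "__main__":
    lines = [(0, "the quick fox"), (1, "the lazy dog")]
    print(WordCount().run(lines))
```

The user only writes the two methods in `WordCount`; the grouping and ordering live in the base class, which is the point of treating it as an interface.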

Table of contents

- How to write mapreduce in 2021

- Mapreduce python

- Mapreduce mongodb

- Mapreduce example problems

- Hadoop mapreduce

- Mapreduce examples other than word count

- Mapreduce word count example

- In a mapreduce job which phase runs after the map phase completes

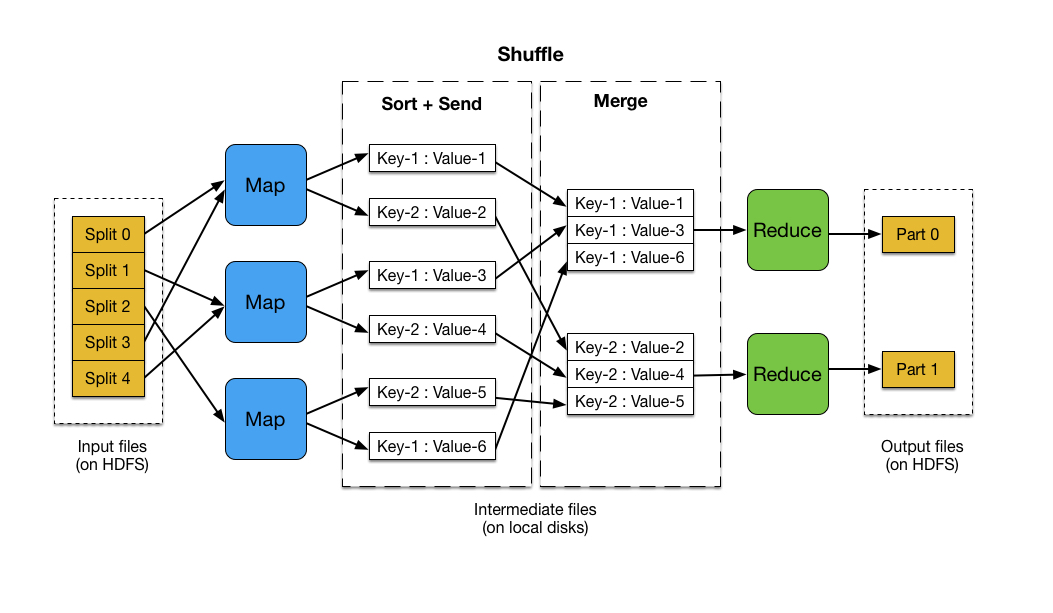

How to write mapreduce in 2021

This image shows how to write mapreduce.

Mapreduce python

This image shows Mapreduce python.

Mapreduce mongodb

This picture demonstrates Mapreduce mongodb.

Mapreduce example problems

This image shows Mapreduce example problems.

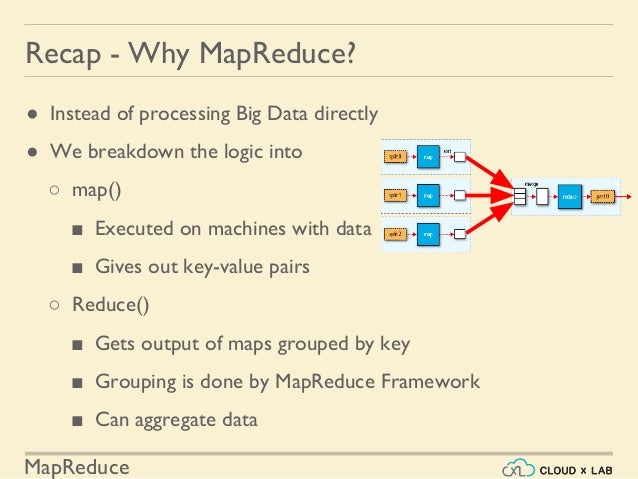

Hadoop mapreduce

This image demonstrates Hadoop mapreduce.

Mapreduce examples other than word count

This image illustrates Mapreduce examples other than word count.

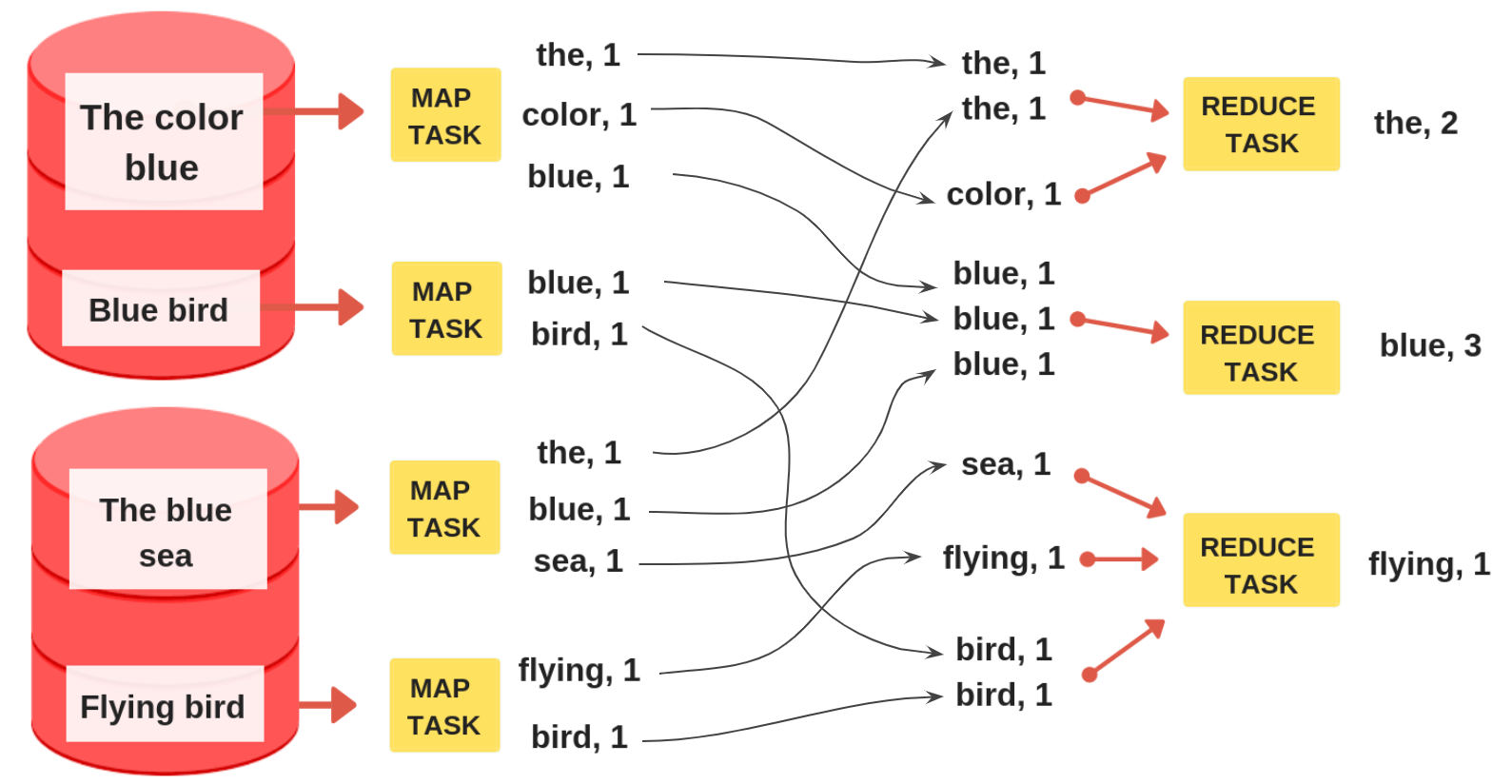

Mapreduce word count example

This image represents the Mapreduce word count example.

In a mapreduce job which phase runs after the map phase completes

This picture demonstrates which phase runs after the map phase completes in a mapreduce job.

What kind of computations can you do with MapReduce?

MapReduce can perform distributed, parallel computations over large datasets across a large number of nodes. A MapReduce job usually splits the input dataset into chunks, and the Map tasks then process each chunk independently in a completely parallel manner. The output of the maps is then sorted and passed as input to the Reduce tasks.
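The split, map, sort and reduce steps described above can be sketched in a few lines of Python. This is a single-process illustration under stated assumptions: the function names `split_input`, `map_task`, `reduce_task` and `run_job` are made up for this example, and the map tasks run one after another here, whereas a real framework would run them in parallel on separate nodes.

```python
from itertools import groupby
from operator import itemgetter


def split_input(lines, n_splits):
    """Divide the input into independent chunks, one per map task."""
    return [lines[i::n_splits] for i in range(n_splits)]


def map_task(chunk):
    """Emit a (word, 1) pair for every word in one chunk."""
    return [(word, 1) for line in chunk for word in line.split()]


def reduce_task(key, values):
    """Combine all values that share a key."""
    return key, sum(values)


def run_job(lines, n_splits=2):
    # Map phase: each chunk is processed without looking at the others.
    mapped = []
    for chunk in split_input(lines, n_splits):
        mapped.extend(map_task(chunk))
    # Sort phase: bring equal keys next to each other.
    mapped.sort(key=itemgetter(0))
    # Reduce phase: one reduce call per distinct key.
    return [
        reduce_task(key, [value for _, value in group])
        for key, group in groupby(mapped, key=itemgetter(0))
    ]
```

Calling `run_job(["a b", "b c", "a"])` returns `[("a", 2), ("b", 2), ("c", 1)]`. The sort step matters because `groupby` only merges adjacent items, which mirrors why the framework sorts map output before handing it to the reducers.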

Where did the idea of MapReduce come from?

MapReduce was introduced in 2004 in the paper "MapReduce: Simplified Data Processing on Large Clusters," published by Google. It is a paradigm with two phases, the mapper phase and the reducer phase. In the mapper phase, the input is given in the form of key-value pairs.

How are text and intwritable used in MapReduce?

To match the types of the data being processed, Text and IntWritable are used as the data types here. The last two data types, 'Text' and 'IntWritable', are the types of the output generated by the reducer, which takes the form of a key-value pair.

How does a MapReduce program work in Java?

MapReduce is a programming framework that enables processing of very large sets of data using a cluster of commodity hardware. It works by distributing the processing logic across a large number of machines, each of which applies the logic locally to a subset of the data. The final result is consolidated and written to the distributed file system.
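The pattern of applying the same logic locally to subsets of the data and then consolidating can be illustrated in Python. This sketch makes several assumptions: worker threads stand in for machines, the names `count_words` and `distributed_word_count` are invented for the example, and the final write to a distributed file system is omitted. It is not how Hadoop's Java API is used.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def count_words(subset):
    """Logic that each worker applies locally to its own subset."""
    return Counter(word for line in subset for word in line.split())


def distributed_word_count(lines, n_workers=3):
    # Partition the data so that each worker sees only its own subset.
    subsets = [lines[i::n_workers] for i in range(n_workers)]
    # Threads stand in for cluster machines in this sketch.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(count_words, subsets))
    # Consolidate the partial results into the final result.
    total = Counter()
    for partial in partials:
        total.update(partial)
    return dict(total)
```

Because each partial count depends only on its own subset, the workers never need to communicate until the consolidation step, which is what lets the approach scale to many machines.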

Last Update: Oct 2021